We, at the bottom of the military food chain, recount a legend about a list of acronyms, buzz words, and technobabble circulated amongst our betters. “Learn these terms, say them during meetings, and nod gravely,” they say. “Your troops will believe you can see the future, and budgets will fall like manna from the sky.” C4ISR has been on that list for decades, and as buzz words go, it has been buzzier than most. I have always liked the quote misattributed to Einstein postered on many a dorm room wall: “If you can’t explain it simply, you don’t understand it well enough.” In the spirit of not-Einstein, my intent here is to provide the reader with a simple mind map for much of the alphabet soup supped during those meetings, and often repeated in impenetrable articles forwarded to us by bespectacled smart people.

Four Boxes

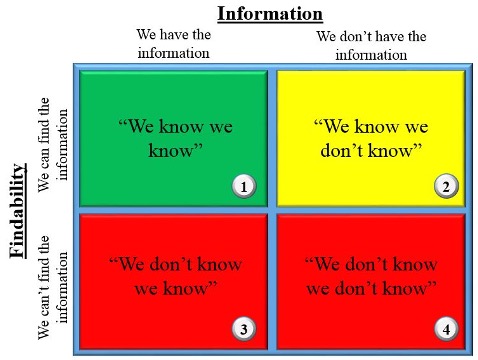

I am an Intelligence Officer and tend to see the world through that lens. However, everything here should be understood to apply to any aspect of the military information economy. We are essentially involved in the same game: providing leadership with a knowledge advantage in the context of competitive decision making. Intelligence tries to provide a Commander with that knowledge regarding the adversary; Operations provides it regarding friendly forces; Logistics, regarding the movement of resources, and so on. In the simplest form, this advantage is present when we know things; however, it is also necessary that the knowledge be ‘findable.’ Presence of information and the extent to which it is findable will serve as two axes in an explanatory four-square logical construct, resulting in:

Box #1 – “We know we know”

Box #2 – “We know we don’t know”

Box #3 – “We don’t know we know”

Box #4 – “We don’t know we don’t know”

In Box #1, the required knowledge is present, findable, and relevant. Box #1 is always the gold standard and is the enabler best placed to confer the decision-making advantage leaders want. The whole game in a military information economy is to provide leaders with a vantage point in Box #1.

In Box #2, some aspect of the situation is understood well enough to know the ways in which our knowledge is lacking. Perhaps we simply don’t know something significant, or prior knowledge we had has been overtaken by events. Box #2 suggests that we know what information we need, or at the very least, we can temper analyses on related subjects through an understanding of our knowledge gaps.

Box #3 and Box #4 are significant problems and liabilities. Box #3 means that we have information on hand that could support a decision, provide warning, or increase the efficiency of resource allocation, but due to some critical failure of technology, policy, or analysis, we have squandered this opportunity. Box #4 means that we fail to understand the subject enough to even understand what we don’t know, and as such are incapable of even scoping our own ignorance. The red boxes are the states of knowledge in which much political ammunition is given to those who oppose the military on principle, often with calls for explanations as to why resources are allocated to the service if our ignorance level is that high. The scary things with rending claws and bloody fangs that blind-side us when we can least take the hit live in Boxes #3 and #4.
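For readers who think better in code, the four boxes can be sketched as a tiny lookup on two yes/no questions. This is only an illustration of the construct: the names `Box`, `classify`, `held`, and `aware` are my own inventions for the sketch, not doctrinal terms, and reducing each axis to a boolean is a simplifying assumption.

```python
from enum import Enum


class Box(Enum):
    KNOWN_KNOWN = 1      # "We know we know"
    KNOWN_UNKNOWN = 2    # "We know we don't know"
    UNKNOWN_KNOWN = 3    # "We don't know we know"
    UNKNOWN_UNKNOWN = 4  # "We don't know we don't know"


def classify(held: bool, aware: bool) -> Box:
    """Map two yes/no answers onto one of the four boxes.

    held  -- assumption for this sketch: the information exists in our holdings
    aware -- assumption for this sketch: we know where we stand, i.e. we can
             find what we hold, or we can at least name the gap
    """
    if held and aware:
        return Box.KNOWN_KNOWN
    if not held and aware:
        return Box.KNOWN_UNKNOWN
    if held and not aware:
        return Box.UNKNOWN_KNOWN
    return Box.UNKNOWN_UNKNOWN


if __name__ == "__main__":
    # Walk all four combinations of the two axes.
    for held in (True, False):
        for aware in (True, False):
            box = classify(held, aware)
            print(f"held={held}, aware={aware} -> Box #{box.value}")
```

The point of the sketch is that "held" alone is never enough: information we hold but cannot find lands in Box #3, not Box #1.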

To give a sense of the stakes, the American 9/11 Commission determined that more than enough data existed to anticipate an attack on the World Trade Center towers by al-Qaeda, but that information was locked in disparate stovepipes and held back by petty squabbles between agencies (a Box #3 problem), and that moreover, no one agency had a dedicated group of individuals examining the problem in sufficient depth to be able to understand the true risks, aggressively determine gaps, and either collect the data from other agencies or put in place their own collection plans (a Box #4 problem). What resulted were thousands of deaths, several wars, and immeasurable blood and treasure expended to overcome the Western World’s inability to move leaders’ level of understanding into Box #1. It is exactly this movement of knowledge between the boxes that is key to understanding many of the acronyms and buzzwords that fill the legendary list of technobabble.

Looking at our four-square depiction of states of knowledge, C4ISR can be imagined as all of the various methods that move knowledge towards Box #1. C4ISR stands for Command, Control, Communications, Computers, Intelligence, Surveillance, and Reconnaissance. Command and Control defines the questions we ask in Box #2, and why we provide the answers in Box #1. Communications allows us to place the call to tell the Commander what we learned. Computers may be the means we use to aggregate data that resides in Box #3, analysis of which might result in a better understanding of questions in Box #2, thence to a Box #1 understanding. Intelligence, Surveillance, and Reconnaissance (henceforth, ISR) is about collecting the answers to Box #2 questions through whatever means are available to us, be it technical collection such as SIGINT or MASINT, interactions with the people in our Areas of Operations (Intelligence nerds like me refer to that as HUMINT), liaison with partner forces, or perhaps a simple Google search (OSINT). For the record, C4ISR, JISR, Intelligence, and all of the collection disciplines have doctrinal definitions resplendent with adjectives that are worth reading; however, at the end of the day, what they all do is let Commanders make decisions while sitting in Box #1.

Ladders

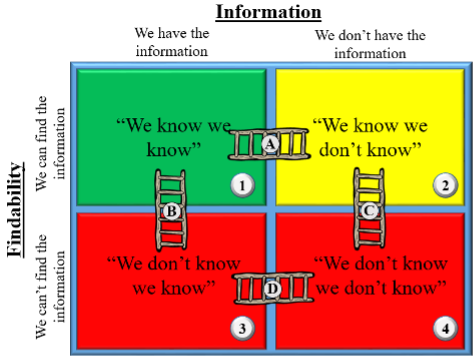

If moving our knowledge to Box #1 is the game, then let’s continue stretching that analogy to a four-square playing surface of snakes and ladders that move information between the squares. Ladders are, by and large, made of C4ISR capabilities, but examining the nature of each ladder individually is useful for understanding the terminology that matters.

In the Intelligence business, ‘Ladder A’ represents the effective use of requests for information (RFIs) or the execution of Collection Operations (read: leveraging of ISR and PED) to construct a better understanding of whatever the Commander needs to know. We refer to the process of prioritizing and answering these questions as Intelligence Requirements Management and Collection Management (IRM & CM). That process is informed by Priority Intelligence Requirements (PIRs), which are, in turn, the questions prioritized for heat and light so that their answers move to Box #1. Breaking those PIRs into answerable binary or empirical questions results in the development of an Intelligence Collection Plan (ICP), but I digress. Operations gets similar direction from the Commander regarding information priorities in the form of Commander’s Critical Information Requirements (CCIRs); these are, effectively, a list of things that need to be moved into Box #1 without delay whenever possible due to the gravity of what they mean.

I regard ‘Ladder B’ as a terrifying form of serendipity. It can result from the collapsing of stovepipes, either within or between institutions, which has the effect of creating a larger sample of informational ‘noise,’ from which correlations that were previously indistinguishable can provide a ‘signal.’ ‘Ladder B’ is generally a good thing and should be humbly recognized as a welcome invader from outside our institutional tunnel vision, and a lesson on how to better exploit information in future.

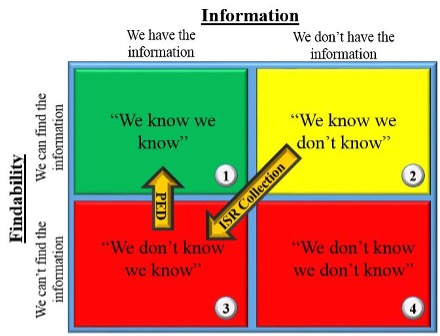

The execution of Collection Operations is a potential form of ‘Ladder A’; however, it is important to realize that there is an intermediate step that is necessary to avoid the collection becoming a resident of Box #3, namely Processing, Exploitation, and Dissemination (PED).

The knowledge gap in Box #2 prompts collection, intending to create a happy little ‘Ladder A’ migration; however, directly after collection, the data exists without being understood, and so it makes an inadvertent migration to Box #3. The point of PED is to turn this collected data into meaning as it relates to a collection requirement (CR), and then transfer it to an All-Source Analyst who is able to provide contextual relevance, bringing the process home to Box #1. Why the recent redirection of assets to developing PED expertise and the related networks? Because the organization spends time and treasure to collect answers to our questions, all of which is wasted unless those answers make it to Box #1. Put another way, collection without PED and the enabling C4ISR storage and transmission infrastructure is largely a waste of time and resources.
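The detour through Box #3 can be made concrete with a small sketch. Everything named here (`Collect`, `current_box`, the `processed` and `analysed` flags, and the identifier `CR-001`) is hypothetical and exists only for illustration. The sketch also assumes that data which has been through PED but not yet reached an All-Source Analyst still counts as Box #3.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Collect:
    """One collected item answering a collection requirement (CR)."""
    requirement: str
    processed: bool = False  # PED has turned the raw data into meaning
    analysed: bool = False   # an All-Source Analyst has added context


def current_box(item: Optional[Collect]) -> int:
    """Return the box number a requirement sits in, given what we hold."""
    if item is None:
        return 2  # the gap is known, but nothing has been collected yet
    if item.processed and item.analysed:
        return 1  # understood, contextualized, ready for the Commander
    return 3      # on hand, but not yet understood (assumption, see lead-in)


if __name__ == "__main__":
    item = None
    print("before collection:", current_box(item))
    item = Collect(requirement="CR-001")  # hypothetical CR identifier
    print("after collection: ", current_box(item))
    item.processed = True
    print("after PED:        ", current_box(item))
    item.analysed = True
    print("after analysis:   ", current_box(item))
```

Note that collection alone moves the requirement from Box #2 to Box #3, not to Box #1; only the last two steps finish the climb.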

Transitioning out of Box #4 is slightly more complicated, in that there is no direct logical path to Box #1, and instead, the requirement must transit through Box #2 or Box #3 prior to being resolved. With this in mind, ‘Ladder C’ implies that a renewed analytic effort has been undertaken that has revealed a blind spot in our understanding, so we start to contemplate previously unconsidered questions, with the intent to move the collected answers up ‘Ladder A.’ ‘Ladder D’, by comparison, implies that we have come into possession of some new source of information that is either unfindable or unprocessed (denoting a lack of PED capacity), or that we lack the analysis needed to integrate into our overall understanding of the subject; ideally, a ‘Ladder D’ event is followed by a ‘Ladder B’ event, but as the information is an unknown, unexploited resource, it persists as a potential liability until discovered.
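The four ladders amount to a small directed graph, and the claim that Box #4 has no direct route to Box #1 can be checked by enumerating paths. The sketch below is my own illustration: the `LADDERS` table encodes the moves as described in the text (A: Box #2 to Box #1, B: Box #3 to Box #1, C: Box #4 to Box #2, D: Box #4 to Box #3), and the function name `routes` is hypothetical.

```python
from typing import Dict, List, Tuple

# Ladder name -> (from box, to box), as described in the text.
LADDERS: Dict[str, Tuple[int, int]] = {
    "A": (2, 1),  # RFIs and collection answer a known gap
    "B": (3, 1),  # collapsed stovepipes reveal what we already held
    "C": (4, 2),  # renewed analysis exposes a blind spot
    "D": (4, 3),  # a new, unexploited source comes into our possession
}


def routes(start: int, goal: int = 1) -> List[List[str]]:
    """List every sequence of ladders leading from `start` to `goal`."""
    if start == goal:
        return [[]]  # already there: one route, using no ladders
    found: List[List[str]] = []
    for name, (src, dst) in LADDERS.items():
        if src == start:
            for rest in routes(dst, goal):
                found.append([name] + rest)
    return found


if __name__ == "__main__":
    for box in (2, 3, 4):
        for route in routes(box):
            print(f"Box #{box} -> Box #1 via ladders {' then '.join(route)}")
```

Every route out of Box #4 takes two ladders, either C then A or D then B, which is the "no direct logical path" point made above.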

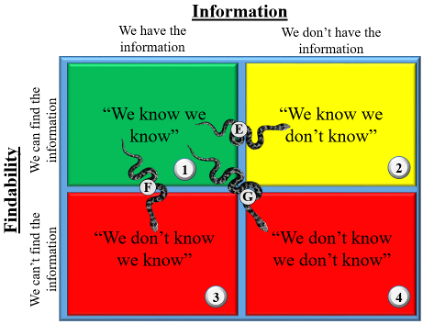

Snakes

Having spent some time describing the ladders, understanding their inverse corollary, the snakes, is equally worthwhile, particularly if we intend to prevent them from breeding (I prefer my metaphors shaken, not stirred).

‘Snake E’ suggests that a collection capability or source of information has ceased to be accessible, so our knowledge has been overtaken by events. ‘Snake F’ suggests that we retain information on the subject, but that it has been misplaced, either due to the loss of a network (read: the collapse of the relevant C4ISR infrastructure), a loss of analytic or technical ability to operationalize the information, or the degradation of storage, search, or databasing functions. ‘Snake G’ implies a complete loss of situational awareness, such as during personnel transitions, mandate changes, loss of manpower, or reallocation of scarce capacity against myriad priorities. The snakes have pet names like “network collapse,” “losing the bubble,” or in the worst cases, “Out to Lunch.” Much like the board game, snakes mean the Commander is losing whatever competitive decision-making process he is engaged in.

So what?

First off, identify and kill the snakes. If you can’t do that, figure out the least painful snake to be bitten by. Ladders are hard to build, so if possible, take the snakebite without feeling the need to destroy a ladder to mitigate the pain.

Second, make sure the effort goes into building the right ladders. Put another way, prioritize what matters most, facilitate the programs that actually answer relevant questions, and don’t forget to foster the C4ISR capabilities from which your ladder will likely be built.

Third, there are those who build and maintain barricades to ladder-builders; they are not snakes per se, but they are in the reptile family. I believe in the sovereignty of Commanders to execute government policy. If properly enabled, they enjoy relative impunity to slay snakes and build ladders at will. If I am loyal to my Commander, I will cry bloody murder on the first sighting of a reptile, perhaps helping the lizard to become part of the ladder-building team if I can. Otherwise, the Commander’s sovereignty is obstructed one tiny defensive barricade at a time by those “cold and timid souls who neither know victory nor defeat.”

For the record, that last quote is from the 1910 “Man in the Arena” speech and can be positively attributed to U.S. President Theodore Roosevelt. I don’t think he meant it to be about ladder builders, but reading it in the morning helps me to believe that I can.